I recently came across the need to host a .NET Core web app as a Windows Service. In this case, it was because each machine needed to locally be running an API. But it’s actually pretty common to have a web interface to manage an application on a PC without needing to set up IIS. For example if you install a build/release management tool such as Jenkins or TeamCity, it has a web interface to manage the builds and this is able to be done without the need for installing and configuring an additional web server on the machine.

Luckily .NET Core actually has some really good tools for accomplishing all of this (And even some really awesome stuff for being able to run a .NET Core web server by double clicking an EXE if that’s your thing).

A Standalone .NET Core Website/Web Server

The first step actually has nothing to do with Windows Services. If you think about it, all a Windows Service is, is a managed application that’s hidden in the background, will restart on a machine reboot, and if required, will also restart on erroring. That’s it! So realistically what we first want to do is build a .NET Core webserver that can be run like an application, and then later on we can work out the services part.

For the purpose of this tutorial, I’m just going to be using the default template for an ASP.net Core website. The one that looks like this:

We first need to head to the csproj file of our project and add in a specific runtime (Or multiple), and an output type. So overall my csproj file ends up looking like:

<Project Sdk="Microsoft.NET.Sdk.Web">

<PropertyGroup>

<TargetFramework>netcoreapp2.1</TargetFramework>

<RuntimeIdentifiers>win10-x64;</RuntimeIdentifiers>

<OutputType>Exe</OutputType>

</PropertyGroup>

<ItemGroup>

<PackageReference Include="Microsoft.AspNetCore.App" />

</ItemGroup>

</Project>

Our RuntimeIdentifiers (And importantly notice the “s” on the end there) specifies the runtimes our application can be built for. In my case I’m building only for Windows 10, but you could specify other runtime monkiers if required.

Ontop of this, we specify that we want an outputtype of exe, this is so we can have a nice complete exe to run rather than using the “dotnet run” command to start our application. I’m not 100% sure, but the exe output that comes out of this I think is simply a wrapper to boot up the actual application dll. I noticed this because when you change code and recompile, the exe doesn’t change at all, but the dll does.

Now we need to be able to publish the app as a standalone application. Why standalone? Because then it means any target machine doesn’t have to have the .NET Core runtime installed to get everything running. Ontop of that, there is no “what version do you have installed?” type talk. It’s just double click and run.

To publish a .NET Core app as standalone, you need to run the following command from the project directory in a command prompt/powershell:

dotnet publish --configuration Release --self-contained -r win10-x64

It should be rather self explanatory. We are doing a publish, using the release configuration, we pass through the self contained flag, and we pass through that the runtime we are building for is Windows 10 – 64 Bit.

From your project directory, you can head to: \bin\Release\netcoreapp2.1\win10-x64\publish

This contains your application exe as well as all framework DLL’s to run without the need for a runtime to be installed on the machine. It’s important to note that you should be inside the Publish folder. One level up is also an exe but this is not standalone and relies on the runtime being installed.

From your publish folder, try double clicking yourapplication.exe.

Hosting environment: Production

Content root path: \bin\Release\netcoreapp2.1\win10-x64\publish

Now listening on: http://localhost:5000

Now listening on: https://localhost:5001

Application started. Press Ctrl+C to shut down.

In your browser head to http://localhost:5000 and you now have your website running from an executable. You can copy and paste this publish folder onto any Windows 10 machine, even a fresh install, and have it spin up a webserver hosting your website. Pretty impressive!

Installing As A Window Service

So the next part of this tutorial is actually kinda straight forward. Now that you have an executable that hosts your website, installing it as a service is exactly the same as setting up any regular application as a service. But we will try and have some niceties to go along with it.

First we need to do a couple of code changes for our app to run both as a service, and still be OK running as an executable (Both for debugging purposes, and in case we want to run in a console window and not as a service).

We need to install the following from your package manager console:

Install-Package Microsoft.AspNetCore.Hosting.WindowsServices

Next we need to go into our program.exe and make your main method look like the following:

public static void Main(string[] args)

{

var isService = !(Debugger.IsAttached || args.Contains("--console"));

var builder = CreateWebHostBuilder(args.Where(arg => arg != "--console").ToArray());

if (isService)

{

var pathToExe = Process.GetCurrentProcess().MainModule.FileName;

var pathToContentRoot = Path.GetDirectoryName(pathToExe);

builder.UseContentRoot(pathToContentRoot);

}

var host = builder.Build();

if (isService)

{

host.RunAsService();

}

else

{

host.Run();

}

}

This does a couple of things :

-

It checks whether we are using the debugger, or if we have a console argument of “–console” passed in.

-

If neither of the above are true, it sets the content root manually back to where the exe is running. This is specifically for the service runtime.

-

Next if we are a service, we use a special “RunAsService()” method that .NET Core gives us

-

Otherwise we just do a “Run()” as normal.

Obviously the main point of this is that if the debugger is attached (e.g. we are running from visual studio), or we run from a command prompt with the flag “–console”, it’s going to run exactly the same as before. Back in the day we used to have to run the service with a 10 second sleep at the start of the app, and quickly try and attach the debugger to the process before it kicked off to be able to set breakpoints etc. Now it’s just so much easier.

Now let’s actually get this thing installed!

In your project in Visual Studio (Or your favourite editor) add a file called install.bat to your project. The contents of this file should be:

sc create MyService binPath= %~dp0MyService.exe

sc failure MyService actions= restart/60000/restart/60000/""/60000 reset= 86400

sc start MyService

sc config MyService start=auto

Obviously replace MyService with the name of your service, and be sure to rename the exe to the actual name of your applications exe. Leave the %~dp0 part as this refers to the current batch path (Allowing you to just double click the batch file when you want to install).

The install file creates the service, sets up failure restarts (Although these won’t really be needed), starts the service, and sets the service to auto start in the future if the machine reboots for any reason.

Go ahead and create an uninstall.bat file in your project. This should look like:

sc stop MyService

timeout /t 5 /nobreak > NUL

sc delete MyService

Why the timeout? I sometimes found that it took a while to stop the service, and so giving it a little bit of a break inbetween stopping and deleting helped it along it’s way.

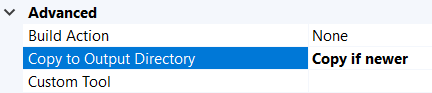

Important! For both of these files, be sure to set them up so they copy to the output directory in Visual Studio. Without this, your bat files won’t output to your publish directory.

Go ahead and publish your application again using our command from earlier:

dotnet publish --configuration Release --self-contained -r win10-x64

Now in your publish directory, you will find your install and uninstall bat files. You will need to run both of these as Administrator for them to work as installing Windows Services requires elevated access. A good idea is that the first time you run these, you run them from a command prompt so you can catch any errors that happen.

Once installed, you should be able to browse to http://localhost:5000 and see your website running silently in the background. And again, the best part is when you restart your machine, it starts automatically. Perfect!