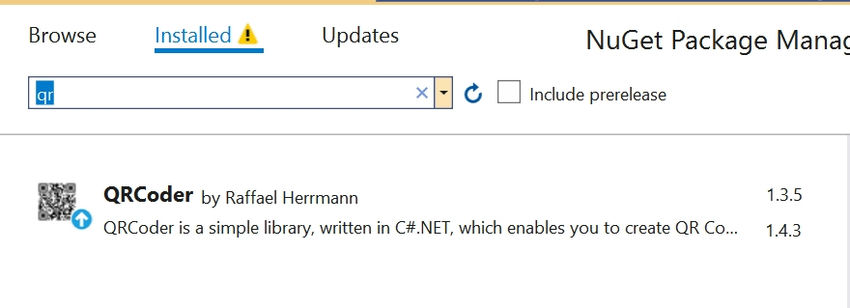

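To generate a QR code with text around it in ASP.NET, you need a QR code library from NuGet. Two free options are ZXing.Net and QRCoder; this article uses QRCoder.

Install the QRCoder package in your project (for example, with `dotnet add package QRCoder`), and you are ready to go. The drawing code below also uses System.Drawing (`Bitmap`, `Graphics`), which .NET 6 and later support only on Windows.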

Generate QR Code Sample

The `QRCodeGenerator` class produces the QR code data. Create an instance of it and call `CreateQrCode()`, which builds the QR code data for the given text:

```csharp
QRCodeGenerator qrGenerator = new QRCodeGenerator();
QRCodeData qrCodeData = qrGenerator.CreateQrCode(text, QRCodeGenerator.ECCLevel.Q);
```

ECCLevel

`ECCLevel` is the error correction level of the QR code. It determines how readable the code remains when it is partially damaged or obscured:

- L (Low): about 7% of the codewords can be restored.
- M (Medium): about 15% of the codewords can be restored.
- Q (Quartile): about 25% of the codewords can be restored.
- H (High): about 30% of the codewords can be restored.

Higher levels tolerate more damage, but they use more of the symbol for error correction and leave less room for data.
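Because the level is a trade-off between damage tolerance and capacity, you can choose it in code. The sketch below is a hypothetical helper. It assumes you know roughly what fraction of the code might be obscured, and it picks the lowest level that covers that fraction. It uses a local `EccLevel` enum that mirrors the member names of QRCoder's `QRCodeGenerator.ECCLevel`, not the real type, so it runs without the package.

```csharp
using System;

// Local stand-in with the same member names as QRCoder's QRCodeGenerator.ECCLevel,
// so this sketch compiles without the package.
public enum EccLevel { L, M, Q, H }

public static class EccLevelPicker
{
    // Approximate share of codewords each level can restore.
    public static double RecoveryRate(EccLevel level) => level switch
    {
        EccLevel.L => 0.07,
        EccLevel.M => 0.15,
        EccLevel.Q => 0.25,
        EccLevel.H => 0.30,
        _ => throw new ArgumentOutOfRangeException(nameof(level)),
    };

    // Lowest level whose recovery rate covers the expected damage (0.0 to 1.0).
    // Lower levels leave more room for data, so the smallest sufficient one wins.
    public static EccLevel Pick(double expectedDamage)
    {
        foreach (EccLevel level in (EccLevel[])Enum.GetValues(typeof(EccLevel)))
        {
            if (RecoveryRate(level) >= expectedDamage)
            {
                return level;
            }
        }
        throw new ArgumentOutOfRangeException(nameof(expectedDamage), "No level restores that much damage.");
    }
}
```

For example, `EccLevelPicker.Pick(0.2)` returns `EccLevel.Q`. In real code you would map that result to `QRCodeGenerator.ECCLevel.Q`.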

Next, create a `QRCode` object from the QR code data:

```csharp
QRCode qrCode = new QRCode(qrCodeData);
```

Converting QR Code into bitmap

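`GetGraphic(int pixelsPerModule)` renders the QR code as a `Bitmap`. `pixelsPerModule` is the size in pixels of each module (each small black or white square), so larger values produce a larger image. `GetGraphic()` also has several overloads for other scenarios, such as custom colors or an embedded icon.

```csharp
Bitmap bitMap = qrCode.GetGraphic(20);
```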
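To see how `pixelsPerModule` translates into image size, the sketch below computes the bitmap width (which equals the height) for a given QR version. It assumes the standard QR layout: 17 + 4 × version modules per side, plus a 4-module quiet zone on each side, which `GetGraphic()` draws by default. The version itself depends on the text length and the ECC level. `QrImageSize` is a hypothetical helper, not part of QRCoder.

```csharp
using System;

// Hypothetical helper: pixel size of a rendered QR code (width equals height).
public static class QrImageSize
{
    public static int Pixels(int version, int pixelsPerModule, bool drawQuietZones = true)
    {
        if (version < 1 || version > 40)
            throw new ArgumentOutOfRangeException(nameof(version), "QR versions run from 1 to 40.");
        if (pixelsPerModule < 1)
            throw new ArgumentOutOfRangeException(nameof(pixelsPerModule));

        int modules = 17 + 4 * version;   // modules per side for this version
        if (drawQuietZones)
            modules += 2 * 4;             // 4-module blank border on each side
        return modules * pixelsPerModule;
    }
}
```

For instance, a version 1 code at 20 pixels per module is (21 + 8) × 20 = 580 pixels wide, and a version 4 code at 7 pixels per module is 287 pixels wide. Any text positions hard-coded in pixels therefore only fit one image size.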

Converting bitmap to base64 string

`bitMap.Save()` writes the bitmap to a stream in an image format. The `System.Drawing.Imaging.ImageFormat` argument chooses the format, such as PNG, GIF, JPEG, or icon.

We then copy the `MemoryStream` into a byte array and encode it as a Base64 string.

```csharp
string base64String;
using (System.IO.MemoryStream ms = new System.IO.MemoryStream())
{
    bitMap.Save(ms, System.Drawing.Imaging.ImageFormat.Png);
    byte[] byteImage = ms.ToArray();
    base64String = Convert.ToBase64String(byteImage);
}
```

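Generate URL for QR Code Image

We can add the data URL prefix on the server before returning the result from the API, or leave that to the UI.

On the server:

```csharp
ImageUrl = "data:image/png;base64," + base64String;
```

If the API returns only `base64String`, the UI can build the URL itself (Angular binding syntax):

```html
<img [src]="'data:image/png;base64,' + base64String" />
```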
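If the API builds the URL, keep the prefix and the saved image format in one place. That avoids mismatches such as JPEG bytes labelled `image/png`. The sketch below is a hypothetical `DataUri.Build` helper that uses only `Convert.ToBase64String`. The class name and the MIME-type parameter are assumptions, not part of QRCoder.

```csharp
using System;

// Hypothetical helper: turns encoded image bytes into a data URL for <img src>.
public static class DataUri
{
    // mimeType must match the format the bitmap was saved in,
    // e.g. "image/png" for ImageFormat.Png or "image/jpeg" for ImageFormat.Jpeg.
    public static string Build(byte[] imageBytes, string mimeType = "image/png")
    {
        if (imageBytes is null) throw new ArgumentNullException(nameof(imageBytes));
        if (string.IsNullOrEmpty(mimeType)) throw new ArgumentException("A MIME type is required.", nameof(mimeType));
        return "data:" + mimeType + ";base64," + Convert.ToBase64String(imageBytes);
    }
}
```

In the method above, `ImageUrl = DataUri.Build(byteImage);` would replace the string concatenation.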

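Sample: Generate QR Code with Text Around It

The sample below calls `GetGraphic(7, Color.Black, Color.White, null, 7, 3)`, which uses this overload:

```csharp
Bitmap GetGraphic(int pixelsPerModule, Color darkColor, Color lightColor, Bitmap icon = null, int iconSizePercent = 15, int iconBorderWidth = 6, bool drawQuietZones = true)
```

The arguments map to the parameters as follows:

- `7`: `pixelsPerModule`
- `Color.Black`: `darkColor`
- `Color.White`: `lightColor`
- `null`: `icon`
- `7`: `iconSizePercent`
- `3`: `iconBorderWidth`

Because `icon` is `null`, no icon is drawn, so `iconSizePercent` and `iconBorderWidth` have no visible effect here. `drawQuietZones` keeps its default of `true`.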

```csharp
using System;
using System.Drawing;
using System.Drawing.Imaging;
using System.IO;
using QRCoder;

public string GenerateRecurringQRCode(string key)
{
    string result = string.Empty;

    // The generator, QR data, renderer, bitmap and stream are all disposable
    using (QRCodeGenerator qrGenerator = new QRCodeGenerator())
    using (QRCodeData qrCodeData = qrGenerator.CreateQrCode(key, QRCodeGenerator.ECCLevel.Q))
    using (QRCode qrCode = new QRCode(qrCodeData))
    // 7 pixels per module, black on white, no icon
    using (Bitmap bitMap = qrCode.GetGraphic(7, Color.Black, Color.White, null, 7, 3))
    using (MemoryStream ms = new MemoryStream())
    {
        // Adds the text around the QR code; returns the same bitmap it was given
        Bitmap qrCodeWithText = AddTextToQRCode(bitMap);

        qrCodeWithText.Save(ms, ImageFormat.Png);
        byte[] byteImage = ms.ToArray();
        result = Convert.ToBase64String(byteImage);
    }

    return result;
}
```

The `AddTextToQRCode()` method below draws the text "DO NOT SCREENSHOT QR CODE" on all four sides of the QR code.

```csharp
using System.Drawing;
using System.Drawing.Drawing2D;

static Bitmap AddTextToQRCode(Bitmap bitMap)
{
    const string str = "DO NOT SCREENSHOT QR CODE";

    // Layout rectangles for the top and bottom text (x, y, width, height)
    RectangleF rectf1 = new RectangleF(26, 8, 350, 100);
    RectangleF rectf2 = new RectangleF(26, 263, 350, 100);

    using (Graphics g = Graphics.FromImage(bitMap))
    using (Font font = new Font("Arial Black", 10, FontStyle.Bold))
    using (StringFormat strf = new StringFormat())
    {
        g.SmoothingMode = SmoothingMode.AntiAlias;
        g.InterpolationMode = InterpolationMode.HighQualityBicubic;
        g.PixelOffsetMode = PixelOffsetMode.HighQuality;

        // Top and bottom text
        g.DrawString(str, font, Brushes.Red, rectf1);
        g.DrawString(str, font, Brushes.Red, rectf2);

        // Center the side text along its layout rectangle
        strf.Alignment = StringAlignment.Center;

        // Left side: move the origin, rotate 90 degrees counterclockwise, draw, reset
        Rectangle recleft = new Rectangle(0, 0, 500, 200);
        g.TranslateTransform(8, 393);
        g.RotateTransform(-90);
        g.DrawString(str, font, Brushes.Red, recleft, strf);
        g.ResetTransform();

        // Right side: move the origin, rotate 90 degrees clockwise, draw, reset
        Rectangle recright = new Rectangle(0, 0, 500, 200);
        g.TranslateTransform(280, -104);
        g.RotateTransform(90);
        g.DrawString(str, font, Brushes.Red, recright, strf);
        g.ResetTransform();

        g.Flush();
    }

    return bitMap;
}
```

Let's break down the code above.

First, we create two rectangles that position the text at the top and bottom of the image. The constructor is `RectangleF(x, y, width, height)`:

```csharp
RectangleF rectf1 = new RectangleF(26, 8, 350, 100);
RectangleF rectf2 = new RectangleF(26, 263, 350, 100);
```

For `rectf1`:

- The x-coordinate of the top-left corner is 26.
- The y-coordinate of the top-left corner is 8.
- The width is 350.
- The height is 100.

`rectf2` has the same x-coordinate and size but starts at y = 263, near the bottom of the image.

Converting bitmap to graphics and setting quality

`Graphics.FromImage(bitMap)` creates a `Graphics` object that draws directly onto the bitmap. We then set its quality options:

- `SmoothingMode`: `AntiAlias` smooths the edges of rendered shapes, which matters most for lines and curves. Text antialiasing is controlled separately by `TextRenderingHint`.
- `InterpolationMode`: controls how colors are estimated when images are scaled or rotated. `HighQualityBicubic` typically gives smoother results than the other modes.
- `PixelOffsetMode`: determines how pixels are offset during rendering, which affects the positioning and clarity of rendered objects. `HighQuality` favors quality over speed.

```csharp
Graphics g = Graphics.FromImage(bitMap);
g.SmoothingMode = SmoothingMode.AntiAlias;
g.InterpolationMode = InterpolationMode.HighQualityBicubic;
g.PixelOffsetMode = PixelOffsetMode.HighQuality;
```

Next, we draw the text in red, in bold Arial Black, at the positions defined by `rectf1` and `rectf2`. The `DrawString` overload used here is:

```csharp
void DrawString(string s, Font font, Brush brush, RectangleF layoutRectangle)
```

- `s`: the text to draw, "DO NOT SCREENSHOT QR CODE".
- `font`: `new Font("Arial Black", 10, FontStyle.Bold)`, which is 10-point bold Arial Black.
- `brush`: the color of the text (`Brushes.Red`).
- `layoutRectangle`: the position and size of the area the text is drawn in.

```csharp
g.DrawString("DO NOT SCREENSHOT QR CODE", new Font("Arial Black", 10, FontStyle.Bold), Brushes.Red, rectf1);
g.DrawString("DO NOT SCREENSHOT QR CODE", new Font("Arial Black", 10, FontStyle.Bold), Brushes.Red, rectf2);
```

Next, we draw rotated text along the left and right edges. Here is the left side, line by line:

- `Rectangle recleft = new Rectangle();` creates the layout rectangle for the left text.
- `recleft.Height = 200;` and `recleft.Width = 500;` set its size.
- `recleft.X = 0;` and `recleft.Y = 0;` place it at the origin. These lines are redundant, because a new `Rectangle` already starts at (0, 0).
- `String str = "DO NOT SCREENSHOT QR CODE";` holds the text to draw.
- `StringFormat strf = new StringFormat();` and `strf.Alignment = StringAlignment.Center;` center the text along the width of the rectangle.
- `g.TranslateTransform(8, 393);` moves the origin of the drawing surface to the point (8, 393).
- `g.RotateTransform(-90);` rotates the drawing surface 90 degrees counterclockwise around that new origin, so the text runs from bottom to top.
- `g.DrawString(str, new Font("Arial Black", 10, FontStyle.Bold), Brushes.Red, recleft, strf);` draws the text with the current translation and rotation applied.
- `g.ResetTransform();` resets the transformation matrix to the identity matrix. This undoes the translation and rotation so they do not affect later drawing.

```csharp
Rectangle recleft = new Rectangle();
recleft.Height = 200;
recleft.Width = 500;
recleft.X = 0;
recleft.Y = 0;
String str = "DO NOT SCREENSHOT QR CODE";
StringFormat strf = new StringFormat();
strf.Alignment = StringAlignment.Center;
g.TranslateTransform(8, 393);
g.RotateTransform(-90);
g.DrawString(str, new Font("Arial Black", 10, FontStyle.Bold), Brushes.Red, recleft, strf);
g.ResetTransform();
```

Let's do the same for the right side. This time the origin moves to (280, -104) and the surface rotates 90 degrees clockwise, so the text runs from top to bottom. The `str` text and the centered `strf` format from the left side are reused.

```csharp
Rectangle recright = new Rectangle();
recright.Height = 200;
recright.Width = 500;
recright.X = 0;
recright.Y = 0;
g.TranslateTransform(280, -104);
g.RotateTransform(90);
g.DrawString(str, new Font("Arial Black", 10, FontStyle.Bold), Brushes.Red, recright, strf);
g.ResetTransform();
```
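To check where the rotated text lands, the sketch below repeats the translate-then-rotate arithmetic with `System.Numerics.Matrix3x2`. It assumes GDI+'s default `MatrixOrder.Prepend`. Under that order, `TranslateTransform(dx, dy)` followed by `RotateTransform(angle)` rotates each point first and then translates it. `RotatedTextMapping` is a hypothetical helper for checking coordinates; the drawing code does not need it.

```csharp
using System;
using System.Numerics;

// Hypothetical helper: maps a point from the rotated text space to bitmap pixels.
public static class RotatedTextMapping
{
    // Rotate by angleDegrees (positive = clockwise on screen, since y points down),
    // then translate by (dx, dy), mirroring TranslateTransform + RotateTransform.
    public static Vector2 ToBitmap(Vector2 local, float dx, float dy, float angleDegrees)
    {
        float radians = angleDegrees * MathF.PI / 180f;
        Matrix3x2 transform = Matrix3x2.CreateRotation(radians) * Matrix3x2.CreateTranslation(dx, dy);
        return Vector2.Transform(local, transform);
    }
}
```

For the left side, `ToBitmap(new Vector2(250, 0), 8, 393, -90)` gives approximately (8, 143). The center of the 500-pixel-wide layout rectangle therefore lands 8 pixels from the left edge, at y = 143. The right-side values `280, -104, 90` give approximately (280, 146).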

Finally, `g.Flush()` forces any pending graphics operations to complete, and the method returns the bitmap to `GenerateRecurringQRCode()`.

```csharp
g.Flush();
return bitMap;
```

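Note: If you change the text, the font, or the QR code size, adjust the rectangle positions and sizes to match. The coordinates above are tuned for this particular text and image.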
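One way to follow this note without recalculating every coordinate by hand is to derive the positions from the bitmap size. The sketch below is a hypothetical helper; `BorderTextLayout` is not part of QRCoder or the original code. It assumes the same approach as above: side text drawn at local (0, 0) in a rectangle of width `rectWidth` with `StringAlignment.Center`. It uses only `RectangleF` and `PointF` from System.Drawing.Primitives, which is available on every platform.

```csharp
using System.Drawing;

// Hypothetical helper: derives text layout values from the bitmap size.
public static class BorderTextLayout
{
    // Band across the top, `margin` pixels in from the top, left and right edges.
    public static RectangleF Top(int imageWidth, float margin, float bandHeight) =>
        new RectangleF(margin, margin, imageWidth - 2 * margin, bandHeight);

    // Band across the bottom, ending `margin` pixels above the bottom edge.
    public static RectangleF Bottom(int imageWidth, int imageHeight, float margin, float bandHeight) =>
        new RectangleF(margin, imageHeight - margin - bandHeight, imageWidth - 2 * margin, bandHeight);

    // TranslateTransform offset for the left text, paired with RotateTransform(-90).
    // The top of the text line sits `margin` pixels from the left edge, and the
    // rectangle's center (rectWidth / 2 along local x) lands at half the image height.
    public static PointF LeftOffset(int imageHeight, float margin, float rectWidth) =>
        new PointF(margin, imageHeight / 2f + rectWidth / 2f);

    // TranslateTransform offset for the right text, paired with RotateTransform(90).
    // The top of the text line sits `margin` pixels from the right edge.
    public static PointF RightOffset(int imageWidth, int imageHeight, float margin, float rectWidth) =>
        new PointF(imageWidth - margin, imageHeight / 2f - rectWidth / 2f);
}
```

For example, `LeftOffset(286, 8, 500)` returns (8, 393), the left-side offset hard-coded above. For a different image size you pass the new height instead of recalculating by hand. To fit a different font, you could measure the text with `Graphics.MeasureString` and use its height as `bandHeight`.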

Hope this will be helpful.