This tutorial shows how to run code before and after action methods in the ASP.NET Core MVC request pipeline.

Solution

In an empty project, update the Startup class to add the services and middleware for MVC:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddMvc();
}

public void Configure(
    IApplicationBuilder app,
    IHostingEnvironment env)
{
    app.UseMvc(routes =>
    {
        routes.MapRoute(
            name: "default",
            template: "{controller=Home}/{action=Index}/{id?}");
    });
}
```

Add a class that implements the filter:

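```csharp
using System;
using Microsoft.AspNetCore.Mvc.Filters;

// Inherits Attribute so it can decorate controllers and action methods
public class ParseParameterActionFilter : Attribute, IActionFilter
{
    public void OnActionExecuting(ActionExecutingContext context)
    {
        object param;
        if (context.ActionArguments.TryGetValue("param", out param))
        {
            // "param" was model-bound; the bound value may be null (e.g. ?param=)
            context.ActionArguments["param"] = param?.ToString()?.ToUpper();
        }
        else
        {
            // Nothing was bound, so supply a value for the action
            context.ActionArguments.Add("param", "I come from action filter");
        }
    }

    public void OnActionExecuted(ActionExecutedContext context)
    {
    }
}
```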

In the Home controller, add an action method that uses the action filter:

```csharp
[ParseParameterActionFilter]
public IActionResult ParseParameter(string param)
{
    return Content($"Hello ParseParameter. Parameter: {param}");
}
```

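Browse to /Home/ParseParameter and you'll see "Hello ParseParameter. Parameter: I come from action filter". If you pass a value instead, e.g. /Home/ParseParameter?param=hello, the filter upper-cases it and the response shows HELLO.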

Discussion

Filters run after MVC has selected the action method to execute. MVC provides built-in filters for things like authorisation and caching. Custom filters are very useful for encapsulating reusable code that you want to run before or after action methods.

Filters can short-circuit the pipeline, i.e. stop the code in your action from running and return a result to the client directly. They can also have services injected into them via the service container, which makes them very flexible.

Filter Interfaces

Creating a custom filter requires implementing the interface for the type of filter you need. Most filter types come in two flavours of interface, synchronous and asynchronous:

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc.Filters;

public class HelloActionFilter : IActionFilter
{
    public void OnActionExecuting(ActionExecutingContext context)
    {
        // runs before action method
    }

    public void OnActionExecuted(ActionExecutedContext context)
    {
        // runs after action method
    }
}

public class HelloAsyncActionFilter : IAsyncActionFilter
{
    public async Task OnActionExecutionAsync(
        ActionExecutingContext context,
        ActionExecutionDelegate next)
    {
        // runs before action method
        await next();
        // runs after action method
    }
}
```
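For comparison, the ParseParameterActionFilter from the Solution could also be written against IAsyncActionFilter. This is a minimal sketch, assuming the same "param" argument name; the class name ParseParameterAsyncActionFilter is hypothetical. Code before `await next()` plays the role of OnActionExecuting, and the ActionExecutedContext returned by `next()` carries what OnActionExecuted would receive.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc.Filters;

// Hypothetical async counterpart of ParseParameterActionFilter
public class ParseParameterAsyncActionFilter : Attribute, IAsyncActionFilter
{
    public async Task OnActionExecutionAsync(
        ActionExecutingContext context,
        ActionExecutionDelegate next)
    {
        // Before the action: same argument rewriting as the synchronous filter
        if (context.ActionArguments.TryGetValue("param", out var param))
            context.ActionArguments["param"] = param?.ToString()?.ToUpper();
        else
            context.ActionArguments["param"] = "I come from action filter";

        // Run the action method (and any filters nested inside this one)
        ActionExecutedContext executed = await next();

        // After the action: executed.Result and executed.Exception can be inspected here
    }
}
```

The single method with a `next` delegate makes it easy to share local state between the "before" and "after" halves, which the synchronous pair of methods cannot do without fields.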

You can short-circuit the filter pipeline by setting the Result property (of type IActionResult) on the context parameter; in async filters, also don't call the next delegate:

```csharp
using System;
using Microsoft.AspNetCore.Mvc;
using Microsoft.AspNetCore.Mvc.Filters;

public class SkipActionFilter : Attribute, IActionFilter
{
    public void OnActionExecuting(ActionExecutingContext context)
    {
        // Setting Result short-circuits: the action method never runs
        context.Result = new ContentResult
        {
            Content = "I'll skip the action execution"
        };
    }

    public void OnActionExecuted(ActionExecutedContext context)
    { }
}
```

```csharp
[SkipActionFilter]
public IActionResult SkipAction()
{
    return Content("Hello SkipAction");
}
```
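The async equivalent of SkipActionFilter sets Result and simply returns without calling `next`. A minimal sketch; the name SkipAsyncActionFilter is hypothetical:

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;
using Microsoft.AspNetCore.Mvc.Filters;

// Hypothetical async counterpart of SkipActionFilter
public class SkipAsyncActionFilter : Attribute, IAsyncActionFilter
{
    public Task OnActionExecutionAsync(
        ActionExecutingContext context,
        ActionExecutionDelegate next)
    {
        // Setting Result and NOT calling next() short-circuits:
        // the action method and any later action filters never run
        context.Result = new ContentResult
        {
            Content = "I'll skip the action execution"
        };
        return Task.CompletedTask;
    }
}
```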

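For result filters, you can short-circuit by setting the Cancel property on the context parameter and writing the response yourself, so the client doesn't receive an empty response. Writing to the response is asynchronous, so the filter below uses the async interface (awaiting the write instead of leaving it running unobserved) and, as with async action filters, doesn't call the next delegate:

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc.Filters;

public class SkipResultFilter : Attribute, IAsyncResultFilter
{
    public async Task OnResultExecutionAsync(
        ResultExecutingContext context,
        ResultExecutionDelegate next)
    {
        // Cancel the result and don't call next(): the action's result never executes
        context.Cancel = true;
        await context.HttpContext.Response.WriteAsync("I'll skip the result execution");
    }
}
```

```csharp
[SkipResultFilter]
public IActionResult SkipResult()
{
    return Content("Hello SkipResult");
}
```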

Filter Attributes

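MVC provides filter classes that inherit from the Attribute class and can therefore be used to decorate controllers and action methods. ActionFilterAttribute, ResultFilterAttribute and ExceptionFilterAttribute are abstract base classes you inherit from to create custom filters; ServiceFilterAttribute and TypeFilterAttribute are used to apply filters that need dependency injection (described below):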

- ActionFilterAttribute
- ResultFilterAttribute
- ExceptionFilterAttribute
- ServiceFilterAttribute
- TypeFilterAttribute
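Because ActionFilterAttribute implements the action and result filter interfaces with empty virtual methods, a derived filter only overrides what it needs. A minimal sketch; UpperCaseParameterAttribute is a hypothetical name, and it mirrors only the upper-casing half of ParseParameterActionFilter:

```csharp
using Microsoft.AspNetCore.Mvc.Filters;

// Hypothetical filter: OnActionExecuted and the result methods are inherited no-ops
public class UpperCaseParameterAttribute : ActionFilterAttribute
{
    public override void OnActionExecuting(ActionExecutingContext context)
    {
        // Only rewrite "param" if model binding supplied it
        if (context.ActionArguments.TryGetValue("param", out var param))
            context.ActionArguments["param"] = param?.ToString()?.ToUpper();
    }
}
```

It can be applied as `[UpperCaseParameter]`, since C# lets you drop the Attribute suffix.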

Filter Types

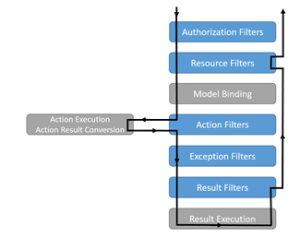

There are various type of filters that run at different stages of the filter pipeline. Below a figure from official documentation illustrates the sequence:

Authorization

Authorization filters run first and short-circuit the request for unauthorised users. They have only one method (unlike most other filters, which have Executing and Executed methods). Normally you won't write your own authorization filters; the built-in filter calls into the framework's authorisation mechanism.
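To show the single-method shape, here is a sketch of a hypothetical RequireSignedInUserFilter. It is for illustration only; in a real application the built-in [Authorize] attribute is the better choice.

```csharp
using System;
using Microsoft.AspNetCore.Mvc;
using Microsoft.AspNetCore.Mvc.Filters;

// Hypothetical filter for illustration; prefer [Authorize] in real code
public class RequireSignedInUserFilter : Attribute, IAuthorizationFilter
{
    // The only method: called before every other filter type
    public void OnAuthorization(AuthorizationFilterContext context)
    {
        if (context.HttpContext.User.Identity?.IsAuthenticated != true)
        {
            // Setting Result short-circuits: no later filter or action runs
            context.Result = new UnauthorizedResult();
        }
    }
}
```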

Resource

Resource filters run before model binding, so they can be used to change how it behaves. They also run after the result has been generated, which makes them useful for things like caching.
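As a sketch of the caching use, the hypothetical NaiveCacheResourceFilter below stores ContentResults by request path and query string and replays them on later requests. It is deliberately naive (no expiry, no size limit, one static cache shared by every decorated action) and is not how the built-in response caching works.

```csharp
using System;
using System.Collections.Concurrent;
using Microsoft.AspNetCore.Mvc;
using Microsoft.AspNetCore.Mvc.Filters;

// Hypothetical, deliberately naive cache for illustration
public class NaiveCacheResourceFilter : Attribute, IResourceFilter
{
    private static readonly ConcurrentDictionary<string, IActionResult> Cache =
        new ConcurrentDictionary<string, IActionResult>();

    // Cache key: request path plus query string
    private static string KeyFor(FilterContext context) =>
        $"{context.HttpContext.Request.Path}{context.HttpContext.Request.QueryString}";

    // Runs before model binding: a cached result short-circuits the rest of the pipeline
    public void OnResourceExecuting(ResourceExecutingContext context)
    {
        if (Cache.TryGetValue(KeyFor(context), out var cached))
        {
            context.Result = cached;
        }
    }

    // Runs after the result has executed: remember successful content results
    public void OnResourceExecuted(ResourceExecutedContext context)
    {
        if (context.Exception == null && context.Result is ContentResult content)
        {
            Cache[KeyFor(context)] = content;
        }
    }
}
```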

Action

Action filters run before and after the action method, so they are useful for manipulating the action's parameters or its result. The context supplied to these filters lets you manipulate the action arguments, the controller and the result.

Exception

Exception filters can handle unhandled exceptions before anything has been written to the response. Exception handling middleware works for most scenarios; however, an exception filter is useful if you want to handle errors differently depending on which action was invoked.
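A minimal sketch of an action-specific exception filter built on ExceptionFilterAttribute. ArgumentExceptionFilter is a hypothetical name, and mapping ArgumentException to a 400 response is just one assumed policy:

```csharp
using System;
using Microsoft.AspNetCore.Mvc;
using Microsoft.AspNetCore.Mvc.Filters;

// Hypothetical filter: turns ArgumentException from an action into a 400 response
public class ArgumentExceptionFilter : ExceptionFilterAttribute
{
    public override void OnException(ExceptionContext context)
    {
        if (context.Exception is ArgumentException ex)
        {
            context.Result = new BadRequestObjectResult(ex.Message);
            // Mark as handled so the exception isn't rethrown to the middleware
            context.ExceptionHandled = true;
        }
        // Other exception types fall through to the exception handling middleware
    }
}
```

Applying it only to the actions where invalid arguments are expected keeps the global error handling unchanged everywhere else.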

Result

Result filters run immediately before and after the action method's result executes, but only if the action method completed successfully. They can be used to manipulate the formatting of the result.
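A small sketch of the "before the result" hook: the hypothetical GeneratedByResultFilter sets a response header while the response has not started yet. The header name X-Generated-By and its value are assumptions for illustration.

```csharp
using System;
using Microsoft.AspNetCore.Mvc.Filters;

// Hypothetical filter; header name and value are illustrative only
public class GeneratedByResultFilter : Attribute, IResultFilter
{
    // Runs before the result writes to the response, so headers can still be set
    public void OnResultExecuting(ResultExecutingContext context)
    {
        context.HttpContext.Response.Headers["X-Generated-By"] = "MVC";
    }

    // Runs after the result has executed; the response may already have started
    public void OnResultExecuted(ResultExecutedContext context)
    {
    }
}
```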

Filter Scope

Filters can be added at three levels of scope: action, controller and global. Attributes are used for action- and controller-level scope. To add a globally scoped filter, add it to the Filters collection of MvcOptions when configuring services in Startup:

```csharp
services.AddMvc(options =>
{
    // by instance
    options.Filters.Add(new AddDeveloperResultFilter("Tahir Naushad"));

    // by type
    options.Filters.Add(typeof(GreetDeveloperResultFilter));
});
```

By default, filters at different scopes execute in this sequence:

1. The Executing methods are called first for Global > Controller > Action filters.

2. Then Executed methods are called for Action > Controller > Global filters.
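To observe this ordering, here is a sketch of a hypothetical TraceActionFilter that records its name in HttpContext.Items on the way in and on the way out (the "trace" key is also an assumption):

```csharp
using System.Collections.Generic;
using Microsoft.AspNetCore.Mvc.Filters;

// Hypothetical tracing filter used only to observe execution order
public class TraceActionFilter : ActionFilterAttribute
{
    private readonly string name;

    public TraceActionFilter(string name) => this.name = name;

    // One list per request, stored in HttpContext.Items
    private static List<string> TraceFor(FilterContext context)
    {
        if (!context.HttpContext.Items.ContainsKey("trace"))
            context.HttpContext.Items["trace"] = new List<string>();
        return (List<string>)context.HttpContext.Items["trace"];
    }

    public override void OnActionExecuting(ActionExecutingContext context) =>
        TraceFor(context).Add($"{name} executing");

    public override void OnActionExecuted(ActionExecutedContext context) =>
        TraceFor(context).Add($"{name} executed");
}
```

Registered globally with `options.Filters.Add(new TraceActionFilter("Global"))`, applied as `[TraceActionFilter("Controller")]` on HomeController and `[TraceActionFilter("Action")]` on an action, the list should read Global executing, Controller executing, Action executing, Action executed, Controller executed, Global executed, provided no filter changes its Order.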

Filter Dependency Injection

To use filters whose dependencies are injected at runtime, you need to add them by type. You can add them globally (as illustrated above); however, if you want to apply them to an action or controller as attributes, you have two options:

ServiceFilterAttribute

This attribute retrieves the filter from the service container. To use it:

Create a filter that uses dependency injection:

```csharp
using Microsoft.AspNetCore.Mvc.Filters;

public class GreetingServiceFilter : IActionFilter
{
    private readonly IGreetingService greetingService;

    // The dependency is supplied by the service container
    public GreetingServiceFilter(IGreetingService greetingService)
    {
        this.greetingService = greetingService;
    }

    public void OnActionExecuting(ActionExecutingContext context)
    {
        context.ActionArguments["param"] =
            this.greetingService.Greet("James Bond");
    }

    public void OnActionExecuted(ActionExecutedContext context)
    { }
}
```

Register the filter in the service container (in ConfigureServices):

```csharp
services.AddScoped<GreetingServiceFilter>();
```

Apply it using ServiceFilterAttribute:

```csharp
[ServiceFilter(typeof(GreetingServiceFilter))]
public IActionResult GreetService(string param)
{
    return Content($"Hello GreetService. Parameter: {param}");
}
```
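The filter's own dependency, IGreetingService, must be registered as well, otherwise resolving GreetingServiceFilter fails at runtime. The tutorial doesn't show IGreetingService, so the single-method interface, the GreetingService implementation and the AddGreetingService helper below are all hypothetical, shaped only to match how the filters call `Greet`:

```csharp
using Microsoft.Extensions.DependencyInjection;

// Hypothetical service shape matching the filters' calls to Greet(name)
public interface IGreetingService
{
    string Greet(string name);
}

public class GreetingService : IGreetingService
{
    public string Greet(string name) => $"Hello {name}";
}

public static class GreetingServiceRegistration
{
    // Hypothetical helper: call it from ConfigureServices next to
    // services.AddScoped<GreetingServiceFilter>()
    public static IServiceCollection AddGreetingService(this IServiceCollection services)
    {
        services.AddScoped<IGreetingService, GreetingService>();
        return services;
    }
}
```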

TypeFilterAttribute

This attribute doesn't require the filter to be registered in the service container; instead, it instantiates the type using an ObjectFactory delegate, resolving the constructor's dependencies from the container. To use it:

Create a filter that uses dependency injection:

```csharp
using Microsoft.AspNetCore.Mvc.Filters;

public class GreetingTypeFilter : IActionFilter
{
    private readonly IGreetingService greetingService;

    // The dependency is resolved from the container when TypeFilter creates the filter
    public GreetingTypeFilter(IGreetingService greetingService)
    {
        this.greetingService = greetingService;
    }

    public void OnActionExecuting(ActionExecutingContext context)
    {
        context.ActionArguments["param"] = this.greetingService.Greet("Dr. No");
    }

    public void OnActionExecuted(ActionExecutedContext context)
    { }
}
```

Apply it using TypeFilterAttribute:

```csharp
[TypeFilter(typeof(GreetingTypeFilter))]
public IActionResult GreetType1(string param)
{
    return Content($"Hello GreetType1. Parameter: {param}");
}
```
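TypeFilterAttribute can also pass values that don't come from the container, through its Arguments property; the remaining constructor parameters are still resolved from the service container. A sketch, assuming a hypothetical NamedLoggingTypeFilter that takes a name plus an ILogger&lt;T&gt; (used here instead of IGreetingService so the block stands on its own):

```csharp
using Microsoft.AspNetCore.Mvc.Filters;
using Microsoft.Extensions.Logging;

// Hypothetical filter: "name" comes from TypeFilterAttribute.Arguments,
// the logger from the service container
public class NamedLoggingTypeFilter : IActionFilter
{
    private readonly string name;
    private readonly ILogger<NamedLoggingTypeFilter> logger;

    public NamedLoggingTypeFilter(string name, ILogger<NamedLoggingTypeFilter> logger)
    {
        this.name = name;
        this.logger = logger;
    }

    public void OnActionExecuting(ActionExecutingContext context)
    {
        context.ActionArguments["param"] = name;
        logger.LogInformation("Set param to {Name}", name);
    }

    public void OnActionExecuted(ActionExecutedContext context)
    { }
}
```

It would be applied as `[TypeFilter(typeof(NamedLoggingTypeFilter), Arguments = new object[] { "Dr. No" })]`.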

You can also inherit from TypeFilterAttribute and apply the derived attribute directly, without TypeFilter:

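```csharp
using Microsoft.AspNetCore.Mvc;

public class GreetingTypeFilterWrapper : TypeFilterAttribute
{
    public GreetingTypeFilterWrapper() : base(typeof(GreetingTypeFilter))
    { }
}
```

```csharp
[GreetingTypeFilterWrapper]
public IActionResult GreetType2(string param)
{
    return Content($"Hello GreetType2. Parameter: {param}");
}
```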