ASP.NET Core is a robust and flexible framework for building web apps and APIs. When developing a RESTful API, it is critical to define a clear, standardized interface so that client applications can integrate with it smoothly. OpenAPI (formerly known as the Swagger Specification) is a language-agnostic standard for describing HTTP APIs; in ASP.NET Core, tools such as Swashbuckle generate an OpenAPI document and interactive documentation directly from our code. In this post, we look at best practices for developing an ASP.NET Core REST API with OpenAPI to keep it consistent, scalable, and maintainable.

Specify API Requirements

Before we begin development, we must outline precisely what the API needs to do. Examine the functionality that must be exposed, the data that must be handled, and the responses clients should expect.

A well-defined API specification provides a solid foundation for both the OpenAPI document and the implementation.

Let's look at an example: defining the requirements for a hypothetical "Task Management API" with OpenAPI. Assuming we're creating an API for task management, we start with a few fundamental criteria.

Define the Functions

Specify the features our API must provide. In this example, the essential operations are:

List all tasks.

Get the details of a specific task.

Create a new task.

Update an existing task.

Delete a task.

Create Data Structures

Define the data structures (models) our API will handle. This example uses a single model, Task, declared under components/schemas:

components:
  schemas:
    Task:
      type: object
      properties:
        id:
          type: integer
          format: int64
        title:
          type: string
        description:
          type: string
        dueDate:
          type: string
          format: date
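To see how this schema lines up with C#, here is a minimal sketch of a matching class, assuming .NET 7 or later (for built-in DateOnly JSON support). The names TaskItem and TaskJson are hypothetical; TaskItem simply avoids a clash with System.Threading.Tasks.Task. Serializing with JsonSerializerDefaults.Web, the defaults ASP.NET Core uses for JSON, produces the camelCase property names and date format the schema declares.

```csharp
#nullable enable
using System;
using System.Text.Json;

// Mirrors the Task schema (hypothetical class name).
public class TaskItem
{
    // type: integer, format: int64
    public long Id { get; set; }

    // type: string
    public string Title { get; set; } = string.Empty;

    // type: string
    public string Description { get; set; } = string.Empty;

    // type: string, format: date -> DateOnly serializes as "yyyy-MM-dd"
    public DateOnly DueDate { get; set; }
}

public static class TaskJson
{
    // Web defaults: camelCase property names, case-insensitive reads
    public static readonly JsonSerializerOptions Options = new(JsonSerializerDefaults.Web);

    public static string Serialize(TaskItem task) => JsonSerializer.Serialize(task, Options);

    public static TaskItem? Deserialize(string json) => JsonSerializer.Deserialize<TaskItem>(json, Options);
}
```

Mapping int64 to long (rather than int) keeps the C# type as wide as the documented one, so ids never overflow on the server.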

Define the Endpoints

Create endpoints for each capability, each with its own set of HTTP methods, request bodies (if any), and response models.

paths:
  /tasks:
    get:
      summary: Get a list of tasks.
      responses:
        '200':
          description: Successful response.
          content:
            application/json:
              schema:
                type: array
                items:
                  $ref: '#/components/schemas/Task'
    post:
      summary: Create a new task.
      requestBody:
        required: true
        content:
          application/json:
            schema:
              $ref: '#/components/schemas/Task'
      responses:
        '201':
          description: Task created successfully.
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/Task'
  /tasks/{taskId}:
    get:
      summary: Get details of a specific task.
      parameters:
        - name: taskId
          in: path
          required: true
          schema:
            type: integer
            format: int64
      responses:
        '200':
          description: Successful response.
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/Task'
    put:
      summary: Update an existing task.
      parameters:
        - name: taskId
          in: path
          required: true
          schema:
            type: integer
            format: int64
      requestBody:
        required: true
        content:
          application/json:
            schema:
              $ref: '#/components/schemas/Task'
      responses:
        '200':
          description: Task updated successfully.
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/Task'
    delete:
      summary: Delete a task.
      parameters:
        - name: taskId
          in: path
          required: true
          schema:
            type: integer
            format: int64
      responses:
        '204':
          description: Task deleted successfully.
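Before writing any controllers, it can help to check that the five operations and their status codes form a coherent contract. The following framework-free sketch, assuming .NET 6 or later, models them with an in-memory store. TaskItem and InMemoryTaskStore are hypothetical names, and the 404 results are an assumed addition that the spec above does not yet document.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical record mirroring the Task schema (int64 id, date-only due date).
public record TaskItem(long Id, string Title, string? Description, DateOnly DueDate);

// Each method returns the HTTP status code the spec documents for that operation.
public class InMemoryTaskStore
{
    private readonly Dictionary<long, TaskItem> _tasks = new();
    private long _nextId = 1;

    // GET /tasks -> 200 with an array of tasks
    public (int Status, IReadOnlyList<TaskItem> Body) ListAll() =>
        (200, _tasks.Values.OrderBy(t => t.Id).ToList());

    // GET /tasks/{taskId} -> 200 with the task, or 404 (assumed)
    public (int Status, TaskItem? Body) Get(long taskId)
    {
        if (_tasks.TryGetValue(taskId, out var task))
        {
            return (200, task);
        }

        return (404, null);
    }

    // POST /tasks -> 201 with the created task; the server assigns the id
    public (int Status, TaskItem Body) Create(TaskItem input)
    {
        var created = input with { Id = _nextId++ };
        _tasks[created.Id] = created;
        return (201, created);
    }

    // PUT /tasks/{taskId} -> 200 with the updated task, or 404 (assumed)
    public (int Status, TaskItem? Body) Update(long taskId, TaskItem input)
    {
        if (!_tasks.ContainsKey(taskId))
        {
            return (404, null);
        }

        var updated = input with { Id = taskId }; // the path id wins over the body id
        _tasks[taskId] = updated;
        return (200, updated);
    }

    // DELETE /tasks/{taskId} -> 204 with no body, or 404 (assumed)
    public int Delete(long taskId) => _tasks.Remove(taskId) ? 204 : 404;
}
```

Returning the status code alongside the body keeps the sketch close to the spec; in an ASP.NET Core controller, the same decisions become Ok, Created, NoContent, and NotFound results.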

By specifying API requirements with OpenAPI, we give our development process a clear, disciplined foundation. The example above shows how to define the functions, data structures, and endpoints for a "Task Management API." A real API design would build on these ideas and also cover authentication, error responses, query parameters, and other concerns.

OpenAPI lets us specify our API precisely, which makes the design easier to communicate to the development team and helps ensure that everyone agrees on it before implementation begins.

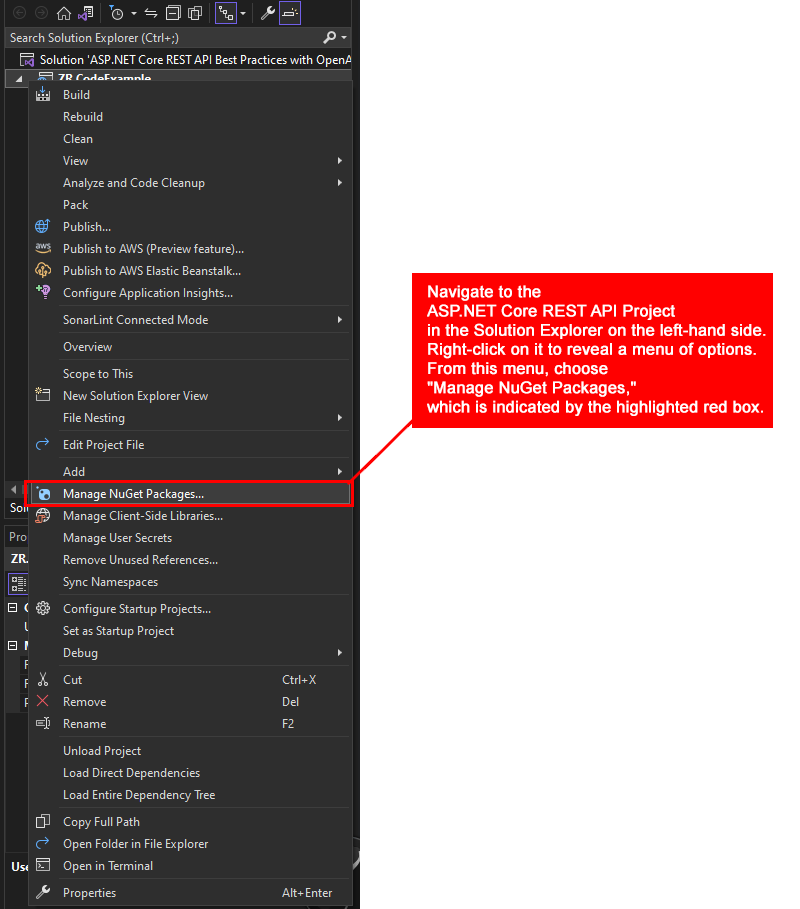

Install OpenAPI Tools

To begin using OpenAPI in ASP.NET Core, we first install the necessary NuGet package. For integrating OpenAPI into our project, we commonly use Swashbuckle.AspNetCore. We can install it with the NuGet Package Manager, the Package Manager Console (Install-Package Swashbuckle.AspNetCore), or the .NET CLI (dotnet add package Swashbuckle.AspNetCore). The graphic below shows how to install it using the NuGet Package Manager.

Step 1. In Solution Explorer, right-click the ASP.NET Core API project and select Manage NuGet Packages.

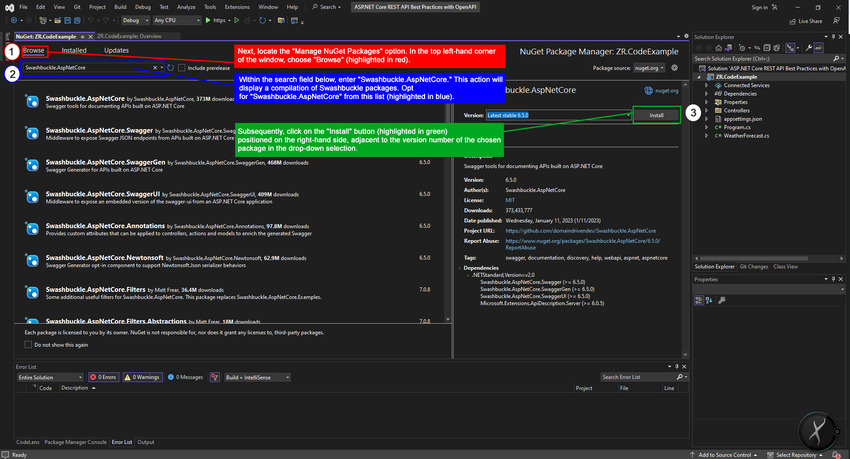

Step 2. In the NuGet Package Manager window, select the Browse tab (highlighted in red) and type "Swashbuckle.AspNetCore" in the search field. The search displays several Swashbuckle packages. Choose Swashbuckle.AspNetCore (highlighted in blue), then click the Install button (highlighted in green) next to the selected version. This completes the installation.

Enable OpenAPI in Program.cs

In this part, we enable OpenAPI in the application: we register the service that generates the OpenAPI document and add the middleware that serves the document and the interactive Swagger UI.

Configure the OpenAPI services and middleware in Program.cs. With the minimal hosting model (.NET 6 and later), Program.cs has no separate ConfigureServices and Configure methods: services are registered on builder.Services before builder.Build(), and middleware is added to app afterwards.

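Step 1. Register the Services

// Program.cs: Swashbuckle's OpenApiInfo type lives in Microsoft.OpenApi.Models
using Microsoft.OpenApi.Models;

var builder = WebApplication.CreateBuilder(args);

// Register services before builder.Build()
builder.Services.AddControllers();
builder.Services.AddSwaggerGen(c =>
{
    c.SwaggerDoc("v1", new OpenApiInfo { Title = "Best Practices for Creating ASP.NET Core REST API using OpenAPI by Peter", Version = "v1" });
});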

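Step 2. Add the Middleware

// Build the app, then add the middleware
var app = builder.Build();

app.UseSwagger(); // serves /swagger/v1/swagger.json
app.UseSwaggerUI(c =>
{
    c.SwaggerEndpoint("/swagger/v1/swagger.json", "Peter Demo API V1");
});

app.MapControllers();
app.Run();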

Design API with RESTful Principles

When designing our API, we should follow the core RESTful principles. They align the API with industry practice and make it predictable for the clients that integrate with it.

Each endpoint uses nouns to identify resources and HTTP verbs to define actions. Applying this rule consistently makes the API clear and greatly improves the developer experience, as the endpoints for a users resource show:

GET /api/users

Action: Retrieve a list of users

Description: Fetches the list of users in the system, following the RESTful practice of using the HTTP GET verb to retrieve data.

GET /api/users/{id}

Action: Retrieve a specific user by ID

Description: Including the user's unique identifier (ID) in the path lets the client retrieve that user's details, again with the HTTP GET verb.

POST /api/users

Action: Create a new user

Description: Adds a new user to the system. POST is the RESTful verb for creating a resource within a collection.

PUT /api/users/{id}

Action: Update an existing user by ID

Description: Modifies the information of the user identified by ID. With PUT, the request body carries the updated representation of that user.

DELETE /api/users/{id}

Action: Delete a user by ID

Description: Removes the user identified by ID from the system, using the HTTP DELETE verb.

Applying these conventions consistently makes every endpoint meaningful and predictable, which improves the API's usability and makes it easier to understand.
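ASP.NET Core's routing attributes perform this mapping for us, but a framework-free sketch makes the convention explicit: the path identifies the resource, and the HTTP method selects the action. UserRoutes and the action names it returns are hypothetical, chosen only to mirror the endpoint list above; unsupported combinations yield 405 or 404, as a real API would.

```csharp
using System.Text.RegularExpressions;

// Hypothetical resolver: noun-based path + HTTP verb -> action name.
public static class UserRoutes
{
    // /api/users (the collection) and /api/users/{id} (a single item)
    private static readonly Regex Collection = new(@"^/api/users/?$");
    private static readonly Regex Item = new(@"^/api/users/(?<id>\d+)$");

    public static string Resolve(string method, string path)
    {
        if (Collection.IsMatch(path))
        {
            return method switch
            {
                "GET" => "ListUsers",
                "POST" => "CreateUser",
                _ => "405 Method Not Allowed"
            };
        }

        var match = Item.Match(path);
        if (match.Success)
        {
            var id = match.Groups["id"].Value;
            return method switch
            {
                "GET" => $"GetUser({id})",
                "PUT" => $"UpdateUser({id})",
                "DELETE" => $"DeleteUser({id})",
                _ => "405 Method Not Allowed"
            };
        }

        return "404 Not Found";
    }
}
```

The same path, such as /api/users/7, supports three different actions; only the verb changes, which is exactly what keeps the URL space small and predictable.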

Use Data Transfer Objects (DTOs)

Data Transfer Objects (DTOs) are simple classes that carry data between clients and the API. Instead of exposing our domain models directly, we expose DTOs, which gives us explicit control over which fields are sent and accepted.

This reduces the risk of leaking sensitive or internal data and of over-posting (clients setting properties they should not be able to change). It also decouples the public contract from the internal model, so each can evolve on its own.

The following example is based on the "Task Management API" from earlier.

using System;

// Original domain model
public class TaskModel
{
    public int Id { get; set; }
    public string Title { get; set; }
    public string Description { get; set; }
    public DateTime DueDate { get; set; }
}

// Data Transfer Object (DTO): only the fields clients exchange
public class TaskDto
{
    public int Id { get; set; }
    public string Title { get; set; }
    public DateTime DueDate { get; set; }
}

We have created a DTO called TaskDto that contains only the properties used for communication. The Description property is deliberately omitted to illustrate how a DTO limits what leaves the domain model: fields that are internal or sensitive never become part of the API contract.

In short, DTOs give us deliberate control over what crosses the API boundary while keeping the domain model encapsulated.
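Because the DTO and the domain model are separate types, something has to copy data between them. A minimal sketch, assuming hand-written mapping, uses extension methods; TaskMappings, ToDto, and ToModel are hypothetical names, and libraries such as AutoMapper can generate this kind of mapping instead.

```csharp
using System;

public class TaskModel
{
    public int Id { get; set; }
    public string Title { get; set; } = string.Empty;
    public string Description { get; set; } = string.Empty;
    public DateTime DueDate { get; set; }
}

public class TaskDto
{
    public int Id { get; set; }
    public string Title { get; set; } = string.Empty;
    public DateTime DueDate { get; set; }
}

// Hypothetical hand-written mapping between the domain model and the DTO.
public static class TaskMappings
{
    // Outgoing: copies only the contract fields; Description never leaves the domain.
    public static TaskDto ToDto(this TaskModel model) => new TaskDto
    {
        Id = model.Id,
        Title = model.Title,
        DueDate = model.DueDate,
    };

    // Incoming: clients can only set what the DTO exposes, which prevents over-posting.
    public static TaskModel ToModel(this TaskDto dto) => new TaskModel
    {
        Id = dto.Id,
        Title = dto.Title,
        DueDate = dto.DueDate,
    };
}
```

An explicit mapping also makes it obvious in code review when a new domain property starts leaking into the API.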

Validate Request Data

Validating incoming request data is essential to a resilient API: it protects data integrity and guards against malformed or malicious input. In ASP.NET Core, we validate with data annotations and, where those fall short, custom validation logic.

Validation does not replace authentication or authorization, but it ensures that only correctly structured data reaches our business logic. It also pairs well with OpenAPI, because many of the same constraints can be published in the API's documentation.

Step 1. Employing Data Annotations

Data annotations are attributes from System.ComponentModel.DataAnnotations that declare validation rules directly on model properties, such as required fields, string lengths, and numeric ranges. ASP.NET Core evaluates them automatically during model binding.

The following example applies data annotations to the TaskDto from our TaskModel example.

using System;
using System.ComponentModel.DataAnnotations;

public class TaskDto
{
    public int Id { get; set; }

    [Required(ErrorMessage = "Title is required.")]
    public string Title { get; set; }

    // DataType is a formatting/metadata hint; it does not reject invalid values
    [DataType(DataType.Date)]
    public DateTime DueDate { get; set; }
}
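The same attributes can be evaluated outside a controller, which is handy in unit tests. A minimal sketch using Validator.TryValidateObject from System.ComponentModel.DataAnnotations; DtoValidator is a hypothetical helper name. Passing validateAllProperties: true makes the validator evaluate every attribute rather than only [Required].

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Linq;

public class TaskDto
{
    public int Id { get; set; }

    [Required(ErrorMessage = "Title is required.")]
    public string Title { get; set; }

    [DataType(DataType.Date)]
    public DateTime DueDate { get; set; }
}

// Hypothetical helper that evaluates the data annotations outside MVC.
public static class DtoValidator
{
    public static List<string> Validate(object instance)
    {
        var results = new List<ValidationResult>();

        // validateAllProperties: true checks every attribute, not only [Required]
        Validator.TryValidateObject(instance, new ValidationContext(instance), results, validateAllProperties: true);

        return results.Select(r => r.ErrorMessage ?? string.Empty).ToList();
    }
}
```

A task without a title produces exactly one error; the [DataType] attribute never adds one, because it describes the value rather than checking it.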

Step 2. Crafting Custom Validation Logic

When the built-in attributes are not enough, we can create our own rules by deriving from the ValidationAttribute class and overriding IsValid.

The following custom validation attribute ensures that the due date is in the future.

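using System;
using System.ComponentModel.DataAnnotations;

// Custom attribute: the decorated DateTime must be later than the current time
public class FutureDateAttribute : ValidationAttribute
{
    public override bool IsValid(object value)
    {
        if (value is DateTime date)
        {
            return date > DateTime.Now;
        }

        // null or a non-DateTime value is treated as invalid
        return false;
    }
}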
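One limitation of the bool overload is that the error cannot easily name the field. A minimal sketch of an alternative overrides the ValidationContext overload instead: it returns a ValidationResult tagged with the member name, supplies a default message through the base constructor, and, as an assumption about the desired behavior, compares against DateTime.UtcNow so the server's time zone does not affect the check. TaskDto is trimmed to one property, and TaskValidation is a hypothetical helper.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Linq;

public class FutureDateAttribute : ValidationAttribute
{
    // Default message; "{0}" becomes the property's display name.
    // An explicit ErrorMessage = "..." on the attribute still takes precedence.
    public FutureDateAttribute() : base("{0} must be in the future.") { }

    protected override ValidationResult? IsValid(object? value, ValidationContext validationContext)
    {
        // Compare in UTC so the server's local time zone does not matter
        if (value is DateTime date && date.ToUniversalTime() > DateTime.UtcNow)
        {
            return ValidationResult.Success;
        }

        var memberNames = validationContext.MemberName is null
            ? null
            : new[] { validationContext.MemberName };
        return new ValidationResult(FormatErrorMessage(validationContext.DisplayName), memberNames);
    }
}

public class TaskDto
{
    [FutureDate]
    public DateTime DueDate { get; set; }
}

// Hypothetical helper returning every validation message (empty when valid).
public static class TaskValidation
{
    public static List<string> Errors(object instance)
    {
        var results = new List<ValidationResult>();
        Validator.TryValidateObject(instance, new ValidationContext(instance), results, validateAllProperties: true);
        return results.Select(r => r.ErrorMessage ?? string.Empty).ToList();
    }
}
```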

Step 3. Integrating with OpenAPI

When the API is documented with OpenAPI, many validation constraints become part of the generated schema. Swashbuckle, for example, marks properties decorated with [Required] as required and turns attributes such as [StringLength], [Range], and [RegularExpression] into length, range, and pattern constraints. Clients reading the documentation can follow these constraints and avoid sending invalid requests. Custom attributes such as FutureDate are not translated automatically, so their rules are worth stating in a description.

Coupling validation with OpenAPI therefore pays off twice: invalid data is rejected at runtime, and the shape of valid data is documented for every client.

In the example below, the TaskDto class is annotated with data validation attributes. The CreateTask action checks ModelState.IsValid and, if validation fails, returns a BadRequest response that includes the validation errors.

using System;
using System.ComponentModel.DataAnnotations;
using Microsoft.AspNetCore.Mvc;

namespace TaskManagementAPI.Controllers
{
    [ApiController]
    [Route("api/[controller]")]
    public class TasksController : ControllerBase
    {
        // ...

        [HttpPost]
        public ActionResult<TaskDto> CreateTask(TaskDto taskDto)
        {
            if (!ModelState.IsValid)
            {
                return BadRequest(ModelState);
            }

            // Process valid data and create the task
            // ...

            // 201 Created with the new task, matching the OpenAPI spec
            return Created($"api/tasks/{taskDto.Id}", taskDto);
        }
    }

    public class TaskDto
    {
        public int Id { get; set; }

        [Required(ErrorMessage = "Title is required.")]
        public string Title { get; set; }

        [DataType(DataType.Date)]
        public DateTime DueDate { get; set; }
    }
}

Keep in mind that when this API is documented with OpenAPI, the constraints the generator understands, here [Required] on Title, become part of the TaskDto schema. Clients reading the documentation know exactly what data is expected and which rules it must satisfy, which makes data exchange with our API more reliable.

Step 4. Leveraging Built-in Validation Features

ASP.NET Core has built-in validation that runs during model binding, before our action executes, and it works alongside the OpenAPI integration.

Inside a controller action, the ModelState.IsValid property reports whether the bound request data passed the data annotations and any custom validation attributes. Note that on a controller marked with [ApiController], an invalid model already triggers an automatic 400 Bad Request with a ValidationProblemDetails body before the action runs, so an explicit check like the one below only takes effect when that behavior is disabled (ApiBehaviorOptions.SuppressModelStateInvalidFilter) or on controllers without [ApiController].

Here is the relevant excerpt from our controller:

[HttpPost]
public ActionResult<TaskDto> CreateTask(TaskDto taskDto)
{
    if (!ModelState.IsValid)
    {
        return BadRequest(ModelState);
    }

    // Process valid data and create the task
    // ...

    return Created($"api/tasks/{taskDto.Id}", taskDto);
}

With this check in place, the API rejects invalid data with a 400 Bad Request before any processing takes place.

Step 5. Enhancing Data Integrity Through Documentation

When validation is combined with OpenAPI, its impact extends beyond code execution: it becomes part of our API's documentation. Rules expressed with standard data annotations appear in the generated OpenAPI schema, which helps developers who consume or contribute to the API understand what valid data looks like. Rules implemented in custom logic, such as a custom ValidationAttribute, are enforced at runtime but are not exported to the schema unless we describe them ourselves.

With careful validation, the documentation becomes a reliable guide for clients, and the runtime checks protect data integrity when a request does not follow it.

Together, validation and OpenAPI make the API both safer and easier to understand.

In the example below, the TaskDto class carries the same validation attributes as before. Additionally, an OpenApiDefinitions class holds metadata for the OpenAPI document: the API's title, version, description, and contact information. On its own the class has no effect; its Info needs to be passed to SwaggerDoc when AddSwaggerGen is registered.

using System;
using System.ComponentModel.DataAnnotations;
using Microsoft.AspNetCore.Mvc;
using Microsoft.OpenApi.Models;

namespace TaskManagementAPI.Controllers
{
    [ApiController]
    [Route("api/[controller]")]
    public class TasksController : ControllerBase
    {
        // ...

        [HttpPost]
        public ActionResult<TaskDto> CreateTask(TaskDto taskDto)
        {
            if (!ModelState.IsValid)
            {
                return BadRequest(ModelState);
            }

            // Process valid data and create the task
            // ...

            return Created($"api/tasks/{taskDto.Id}", taskDto);
        }
    }

    public class TaskDto
    {
        public int Id { get; set; }

        [Required(ErrorMessage = "Title is required.")]
        public string Title { get; set; }

        [DataType(DataType.Date)]
        public DateTime DueDate { get; set; }
    }

    // OpenAPI document metadata
    public class OpenApiDefinitions
    {
        public OpenApiInfo Info { get; } = new OpenApiInfo
        {
            Title = "Task Management API",
            Version = "v1",
            Description = "An API for managing tasks with data validation integrated.",
            Contact = new OpenApiContact
            {
                Name = "Our Name",
                Email = "[email protected]"
            }
        };
    }
}

By integrating data validation with OpenAPI, the validation rules the generator understands become part of our API's documentation. Clients browsing the documentation can see the criteria for each property, which leads to more secure and effective interactions and reinforces data integrity throughout the API.

Step 6. Handling Validation Errors Gracefully

Validation works in both directions. It protects data integrity, and it also requires clear error handling when data does not meet the defined criteria. Good error responses are crucial for a client-friendly experience.

Within our controller actions, we can customize the response for validation errors so that clients see exactly what went wrong and how to fix it.

[HttpPost]
public ActionResult<TaskDto> CreateTask(TaskDto taskDto)
{
    if (!ModelState.IsValid)
    {
        // Map each invalid field to its error messages (requires using System.Linq)
        var validationErrors = ModelState
            .Where(e => e.Value.Errors.Any())
            .ToDictionary(k => k.Key, v => v.Value.Errors.Select(e => e.ErrorMessage).ToArray());

        return BadRequest(validationErrors);
    }

    // Process valid data and create the task
    // ...

    return Created($"api/tasks/{taskDto.Id}", taskDto);
}

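Detailed, per-field error messages help clients correct their requests quickly, which leads to smoother interactions. ASP.NET Core also offers ControllerBase.ValidationProblem(ModelState), which returns the same information in the standardized problem-details format (RFC 7807).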
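The grouping step in the action above can be reproduced, and unit-tested, without MVC. A minimal sketch, assuming Validator.TryValidateObject stands in for ModelState: it produces the same shape as the errors dictionary in ValidationProblemDetails, a property name mapped to an array of messages. The Priority property and the ValidationErrors helper are hypothetical additions for illustration.

```csharp
#nullable enable
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Linq;

public class TaskDto
{
    [Required(ErrorMessage = "Title is required.")]
    [StringLength(100, ErrorMessage = "Title must be at most 100 characters.")]
    public string? Title { get; set; }

    // Hypothetical property, added only to show a second failing field
    [Range(1, 5, ErrorMessage = "Priority must be between 1 and 5.")]
    public int Priority { get; set; }
}

public static class ValidationErrors
{
    // Validates the instance and groups the messages by property name.
    public static Dictionary<string, string[]> For(object instance)
    {
        var results = new List<ValidationResult>();
        Validator.TryValidateObject(instance, new ValidationContext(instance), results, validateAllProperties: true);

        return results
            .SelectMany(
                r => r.MemberNames.DefaultIfEmpty(string.Empty), // object-level errors use ""
                (r, member) => (Member: member, Message: r.ErrorMessage ?? "Invalid value."))
            .GroupBy(x => x.Member)
            .ToDictionary(g => g.Key, g => g.Select(x => x.Message).ToArray());
    }
}
```

A failed [Required] check stops further checks on that property, so a missing title yields one message rather than a cascade.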

Step 7. The Power of Continuous Improvement

Data validation within the OpenAPI context is adaptable. As our API evolves, so can its validation rules, and because the OpenAPI document is generated from the code, changes to standard annotations are reflected the next time the document is generated, giving clients up-to-date expectations for data exchange.

By treating validation as something we improve continuously, we keep the API's validation mechanisms aligned with changing security and integrity requirements.

In the example below, we add a custom validation attribute, FutureDateAttribute, which checks that a date is in the future. It shows how validation logic can grow with changing requirements.

using Microsoft.AspNetCore.Mvc;
using Microsoft.OpenApi.Models;
using System;
using System.ComponentModel.DataAnnotations;

namespace TaskManagementAPI.Controllers
{
    [ApiController]
    [Route("api/[controller]")]
    public class TasksController : ControllerBase
    {
        // ...

        [HttpPost]
        public ActionResult<TaskDto> CreateTask(TaskDto taskDto)
        {
            if (!ModelState.IsValid)
            {
                return BadRequest(ModelState);
            }

            // Process valid data and create the task
            // ...

            return Created($"api/tasks/{taskDto.Id}", taskDto);
        }
    }

    public class TaskDto
    {
        public int Id { get; set; }

        [Required(ErrorMessage = "Title is required.")]
        public string Title { get; set; }

        [DataType(DataType.Date)]
        [FutureDate(ErrorMessage = "Due date must be in the future.")]
        public DateTime DueDate { get; set; }
    }

    // Custom validation attribute: the date must be later than the current time
    public class FutureDateAttribute : ValidationAttribute
    {
        public override bool IsValid(object value)
        {
            if (value is DateTime date)
            {
                return date > DateTime.Now;
            }

            return false;
        }
    }

    // OpenAPI document metadata
    public class OpenApiDefinitions
    {
        public OpenApiInfo Info { get; } = new OpenApiInfo
        {
            Title = "Task Management API",
            Version = "v1",
            Description = "An API for managing tasks with data validation integrated.",
            Contact = new OpenApiContact
            {
                Name = "Our Name",
                Email = "[email protected]"
            }
        };
    }
}

As validation rules change, the regenerated OpenAPI document keeps clients informed of the current expectations for every constraint the generator can express, while custom rules such as FutureDate continue to be enforced at runtime. This keeps validation and documentation in step as the API matures.

Data validation with OpenAPI is therefore a holistic effort that spans design, implementation, documentation, and ongoing adaptation. Together, these produce an API that rejects bad data, supports secure exchanges, and offers a refined experience to both clients and developers.

Document API with Descriptive Comments

Documentation is central to an API that is clear and usable. In ASP.NET Core, we describe endpoints, parameters, and responses with XML documentation comments (///), and OpenAPI tooling can include these comments in the generated documentation. Each well-written comment helps developers understand how to interact with our API.

Step 1. Endpoints and Parameters

Endpoints are the entry points to our API's functionality, so we start by describing them and their parameters. Take, for instance, retrieving a user's details.

In the example below, the <summary> tag gives a concise description of the endpoint's purpose, the <param> tag explains each parameter's role, and the <returns> tag describes the expected response.

/// <summary>
/// Retrieve a specific user's details by ID.
/// </summary>
/// <param name="id">The ID of the user to retrieve.</param>
/// <returns>The details of the requested user.</returns>
[HttpGet("{id}")]
public ActionResult<UserDto> GetUser(int id)
{
    // Implement your logic here (UserDto is the user DTO, not shown)
    throw new NotImplementedException();
}

Step 2. Responses

Responses are what developers ultimately rely on, so they deserve the same care. For an action that creates a new user, for example, the comments should explain the endpoint's purpose, the shape of the request payload, and each response the client can expect. Swashbuckle reads <response code="..."> tags for per-status-code descriptions, and [ProducesResponseType] attributes declare which status codes and types an action returns.

Step 3. Harnessing OpenAPI's Magic

Once our API carries these comments, OpenAPI tooling can turn them into structured documentation. With Swashbuckle, this requires two settings: enabling XML documentation output in the project file (<GenerateDocumentationFile>true</GenerateDocumentationFile>) and passing the generated XML file to IncludeXmlComments inside AddSwaggerGen. The documentation then reflects the description of every endpoint, parameter, and response.

In the example below, the comments on the CreateTask method describe the endpoint's purpose, its parameter, and its expected response. With the settings above in place, these comments are included automatically in the generated API documentation, helping developers interact with the API effectively and confidently.

using Microsoft.AspNetCore.Mvc;
using Microsoft.OpenApi.Models;
using System;
using System.ComponentModel.DataAnnotations;

namespace TaskManagementAPI.Controllers
{
    [ApiController]
    [Route("api/[controller]")]
    public class TasksController : ControllerBase
    {
        // ...

        /// <summary>
        /// Create a new task.
        /// </summary>
        /// <param name="taskDto">The task's information for creation.</param>
        /// <returns>A confirmation of the task's successful creation.</returns>
        [HttpPost]
        public ActionResult<string> CreateTask(TaskDto taskDto)
        {
            if (!ModelState.IsValid)
            {
                return BadRequest(ModelState);
            }

            // Implement your logic here
            // ...

            return Ok("Task created successfully");
        }

        // Other endpoints and actions

        /// <summary>
        /// Data Transfer Object (DTO) for task information.
        /// </summary>
        public class TaskDto
        {
            public int Id { get; set; }

            [Required(ErrorMessage = "Title is required.")]
            public string Title { get; set; }

            [DataType(DataType.Date)]
            [FutureDate(ErrorMessage = "Due date must be in the future.")]
            public DateTime DueDate { get; set; }
        }

        /// <summary>
        /// Custom validation attribute for future date.
        /// </summary>
        public class FutureDateAttribute : ValidationAttribute
        {
            public override bool IsValid(object value)
            {
                if (value is DateTime date)
                {
                    return date > DateTime.Now;
                }

                return false;
            }
        }

        // OpenAPI documentation
        public class OpenApiDefinitions
        {
            public OpenApiInfo Info { get; } = new OpenApiInfo
            {
                Title = "Peter Task Management API",
                Version = "v1",
                Description = "An API for managing tasks with comprehensive documentation.",
                Contact = new OpenApiContact
                {
                    Name = "Peter",
                    Email = "[email protected]"
                }
            };
        }
    }
}

Step 4. Empowering Developers, Amplifying Usability

With precise comments, the resulting documentation becomes an invaluable resource. Developers, whether novices or veterans, get the knowledge they need to work with our API, because the comments communicate what each part of it does and how to use it.

The TasksController example in Step 3 already demonstrates this: its comments go beyond code annotations and state each endpoint's purpose and expected interactions. Developers of any experience level can rely on that information as they explore the API documentation and put the API's capabilities to use.

Combining descriptive comments with OpenAPI helps developers understand the API quickly and use it with confidence, knowing that they are working with a well-documented resource. That clarity encourages adoption and lets developers build on the API with the support of complete documentation.

Versioning Our API

As we design a robust and adaptable API, versioning becomes important. Versioning lets us preserve backward compatibility while leaving room for future enhancements, so the API can serve both current and future needs. One effective approach is to include a version number in the route, which tells developers and clients exactly which iteration they are using.

Step 1. Embracing the Versioning Paradigm

Versioning rests on a simple principle: clearly mark each iteration of our API. Assigning a version number lets the API evolve while earlier versions remain available.

In the example below, the TasksController class includes the version number v1 in its route. As the API evolves, we can introduce new versions, such as v2, while keeping v1 available, so the API stays adaptable and backward-compatible for existing and new consumers.

using Microsoft.AspNetCore.Mvc;
using Microsoft.OpenApi.Models;
using System;
using System.Collections.Generic;

namespace TaskManagementAPI.Controllers
{
    [ApiController]
    [Route("api/v1/[controller]")]
    public class TasksController : ControllerBase
    {
        // ...

        [HttpGet]
        public ActionResult<IEnumerable<TaskDto>> GetTasks()
        {
            // Implement your logic here; an empty list stands in for real data
            return Ok(new List<TaskDto>());
        }

        // Other endpoints and actions

        public class TaskDto
        {
            public int Id { get; set; }
            public string Title { get; set; }
            public DateTime DueDate { get; set; }
        }

        // OpenAPI documentation
        public class OpenApiDefinitions
        {
            public OpenApiInfo Info { get; } = new OpenApiInfo
            {
                Title = "Peter Task Management API",
                Version = "v1",
                Description = "An API for managing tasks with version 1.",
                Contact = new OpenApiContact
                {
                    Name = "Peter",
                    Email = "[email protected]"
                }
            };
        }
    }
}

Step 2. Selecting the Path of URL-Based Versioning

There are several ways to version an API, such as URL segments, query strings, and request headers, each suited to different use cases. The URL-based approach is one of the simplest and most visible. Suppose we have an API for tasks and are introducing versioning; here is how the URL-based approach looks in practice.

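using Microsoft.AspNetCore.Mvc;

// Original, unversioned endpoint kept for existing clients: /api/tasks
[ApiController]
[Route("api/[controller]")]
public class TasksController : ControllerBase
{
    // ...
}

// Versioned endpoint: /api/v1/tasks
// A distinct class name is required, because two classes named TasksController
// cannot coexist in one namespace. The route is written out literally,
// since [controller] would otherwise expand to "TasksV1".
[ApiController]
[Route("api/v1/tasks")]
public class TasksV1Controller : ControllerBase
{
    // ...
}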

In this example, adding /v1/ to the route explicitly indicates the API's version. Existing clients keep calling /api/tasks, while newer clients use /api/v1/tasks to reach the enhanced version.
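In ASP.NET Core, route templates do this dispatching for us, but a framework-free sketch shows the mechanics: an optional version segment after /api/ selects the handler. VersionedRouter and its handlers are hypothetical; the empty key stands for the original unversioned routes that existing clients keep using, and any unknown version segment, such as v2, is rejected with 404 until it is added.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Framework-free sketch of URL-based versioning.
public static class VersionedRouter
{
    // A version segment looks like "v1", "v2", ...
    private static readonly Regex VersionSegment = new(@"^v\d+$");

    // Hypothetical handlers; "" stands for the original unversioned routes.
    private static readonly Dictionary<string, Func<string, string>> Handlers = new()
    {
        [""] = resource => $"original handler for {resource}",
        ["v1"] = resource => $"v1 handler for {resource}",
    };

    public static (int Status, string Body) Dispatch(string path)
    {
        var segments = path.Trim('/').Split('/');
        if (segments.Length < 2 || segments[0] != "api")
        {
            return (404, "Not Found");
        }

        // "/api/v1/tasks" carries a version; "/api/tasks" and "/api/tasks/5" do not.
        bool hasVersion = segments.Length >= 3 && VersionSegment.IsMatch(segments[1]);
        string version = hasVersion ? segments[1] : "";
        string resource = hasVersion ? segments[2] : segments[1];

        return Handlers.TryGetValue(version, out var handler)
            ? (200, handler(resource))
            : (404, $"Unsupported API version '{version}'");
    }
}
```

Introducing v2 then means adding one handler entry (or, in ASP.NET Core, one controller with an api/v2 route); nothing about the existing routes changes.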

Step 3. Bestowing Client-Friendly Simplicity

The main strength of the URL-based approach is its simplicity. The version number acts as a signpost: clients can see which version they are calling just by reading the URL, and can try an endpoint from a browser or a command-line tool without setting any extra headers. This keeps friction low for anyone integrating with the API.

The code below shows the same TasksController as before; the v1 segment in its route is that signpost. Because the version is visible in every URL, clients can easily discover and use the features each version provides.

using System;
using System.Collections.Generic;
using Microsoft.AspNetCore.Mvc;
using Microsoft.OpenApi.Models;

namespace TaskManagementAPI.Controllers
{
    [ApiController]
    [Route("api/v1/[controller]")] // the version signpost: /api/v1/Tasks
    public class TasksController : ControllerBase
    {
        // ...

        [HttpGet]
        public ActionResult<IEnumerable<TaskDto>> GetTasks()
        {
            // Implement your logic here; returning an empty list keeps the sample compilable.
            return Ok(new List<TaskDto>());
        }

        // Other endpoints and actions

        public class TaskDto
        {
            public int Id { get; set; }
            public string Title { get; set; } = string.Empty;
            public DateTime DueDate { get; set; }
        }

        // OpenAPI documentation
        public class OpenApiDefinitions
        {
            public OpenApiInfo Info { get; } = new OpenApiInfo
            {
                Title = "Peter Task Management API",
                Version = "v1",
                Description = "An API for managing tasks with version 1.",
                Contact = new OpenApiContact
                {
                    Name = "Peter",
                    Email = "peter@example.com" // placeholder address
                }
            };
        }
    }
}
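On the client side, the version segment is just part of the path, so targeting a different version is a one-segment change. The sketch below uses a hypothetical helper, TasksUri (not part of any library), that builds the tasks URL for a given version number; the base address is an assumed local development URL.

```csharp
using System;

// Hypothetical client-side helper: builds the tasks collection URL for a given
// API version, following the "api/v{n}/tasks" route convention used in this article.
static Uri TasksUri(Uri baseAddress, int version) =>
    new Uri(baseAddress, $"api/v{version}/tasks");

var baseAddress = new Uri("https://localhost:5001/"); // assumed development address
Console.WriteLine(TasksUri(baseAddress, 1)); // https://localhost:5001/api/v1/tasks
Console.WriteLine(TasksUri(baseAddress, 2)); // https://localhost:5001/api/v2/tasks
```

The resulting Uri can be passed straight to HttpClient.GetStringAsync or any other HTTP call.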

Step 4. Adaptability for the Future

Versioning is more than a technique; it is a plan for how the API will evolve. As the API matures, new features and refinements will emerge, and some of them will break existing contracts. With URL-based versioning, such changes ship under a new version prefix while the old prefix keeps serving existing clients.

In the code example below, the API starts at version 1 with the route api/v1/[controller]. When version 2 arrives, we add a new controller class, TasksControllerV2, with a route under the api/v2 prefix. Each version has its own endpoints, actions, and DTOs (TaskDtoV2 adds a Priority property), so new features can be introduced without changing what version 1 clients receive.

using System;
using System.Collections.Generic;
using Microsoft.AspNetCore.Mvc;
using Microsoft.OpenApi.Models;

namespace TaskManagementAPI.Controllers
{
    // Original version (v1)
    [ApiController]
    [Route("api/v1/[controller]")] // resolves to /api/v1/Tasks
    public class TasksController : ControllerBase
    {
        // ...

        [HttpGet]
        public ActionResult<IEnumerable<TaskDto>> GetTasks()
        {
            // Implement the version 1 logic here; an empty list keeps the sample compilable.
            return Ok(new List<TaskDto>());
        }

        // Other endpoints and actions

        public class TaskDto
        {
            public int Id { get; set; }
            public string Title { get; set; } = string.Empty;
            public DateTime DueDate { get; set; }
        }

        // OpenAPI documentation
        public class OpenApiDefinitions
        {
            public OpenApiInfo Info { get; } = new OpenApiInfo
            {
                Title = "Peter Task Management API",
                Version = "v1",
                Description = "An API for managing tasks with version 1.",
                Contact = new OpenApiContact
                {
                    Name = "Peter",
                    Email = "peter@example.com" // placeholder address
                }
            };
        }
    }

    // New version (v2) introduced
    [ApiController]
    // Explicit path: [controller] would expand to "TasksControllerV2" here, because the
    // "Controller" suffix is only stripped when it ends the class name.
    [Route("api/v2/tasks")]
    public class TasksControllerV2 : ControllerBase
    {
        // ...

        [HttpGet]
        public ActionResult<IEnumerable<TaskDtoV2>> GetTasks()
        {
            // Implement the version 2 logic here; an empty list keeps the sample compilable.
            return Ok(new List<TaskDtoV2>());
        }

        // Other endpoints and actions specific to v2

        public class TaskDtoV2
        {
            public int Id { get; set; }
            public string Title { get; set; } = string.Empty;
            public DateTime DueDate { get; set; }
            public string Priority { get; set; } = string.Empty; // Additional property in v2
        }

        // OpenAPI documentation for version 2
        public class OpenApiDefinitionsV2
        {
            public OpenApiInfo Info { get; } = new OpenApiInfo
            {
                Title = "Peter Task Management API",
                Version = "v2",
                Description = "An API for managing tasks with version 2.",
                Contact = new OpenApiContact
                {
                    Name = "Peter",
                    Email = "peter@example.com" // placeholder address
                }
            };
        }
    }
}
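With two versions in the project, each one can get its own OpenAPI document. Here is a sketch assuming Swashbuckle.AspNetCore 6.x and the nested OpenApiDefinitions and OpenApiDefinitionsV2 classes above. SwaggerDoc registers one document per version, and DocInclusionPredicate decides which endpoints go into which document. This matters because Swashbuckle's default predicate puts every endpoint without a group name into every document, so both versions would otherwise show all routes.

```csharp
// Program.cs: a sketch assuming Swashbuckle.AspNetCore 6.x is referenced.
using System;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;
using TaskManagementAPI.Controllers;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();
builder.Services.AddSwaggerGen(options =>
{
    // One OpenAPI document per version, reusing the metadata classes from the controllers.
    options.SwaggerDoc("v1", new TasksController.OpenApiDefinitions().Info);
    options.SwaggerDoc("v2", new TasksControllerV2.OpenApiDefinitionsV2().Info);

    // Include an endpoint only in the document whose name matches its URL prefix,
    // e.g. "api/v2/..." goes into "v2" and "api/v1/..." into "v1".
    options.DocInclusionPredicate((docName, apiDescription) =>
        apiDescription.RelativePath?.StartsWith($"api/{docName}/", StringComparison.OrdinalIgnoreCase) == true);
});

var app = builder.Build();

app.UseSwagger();
app.UseSwaggerUI(options =>
{
    // Each endpoint becomes an entry in the Swagger UI version selector.
    options.SwaggerEndpoint("/swagger/v1/swagger.json", "v1");
    options.SwaggerEndpoint("/swagger/v2/swagger.json", "v2");
});
app.MapControllers();

app.Run();
```

Matching on the route prefix keeps the split in one place; an alternative is tagging actions with [ApiExplorerSettings(GroupName = "v2")] and relying on the default predicate.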

Step 5. Harnessing Header-Based Versioning

URL-based versioning is popular for its simplicity, but another option is header-based versioning, in which the client sends the version number in a request header and the URL stays the same across versions. This keeps URLs stable, at the cost of more client-side effort: every request has to set the header. The code example below shows a controller declared for version 1.0 under header-based versioning.

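// Header-based versioning
// Assumes the Asp.Versioning.Mvc package: [ApiVersion] only declares the supported version;
// reading it from a request header is configured at startup (HeaderApiVersionReader).
using System.Collections.Generic;
using Asp.Versioning;
using Microsoft.AspNetCore.Mvc;

[ApiController]
[ApiVersion("1.0")] // Version this controller serves; clients request it via the header
[Route("api/[controller]")] // No version segment: the URL stays /api/tasks
public class TasksController : ControllerBase
{
    // ...

    [HttpGet]
    public ActionResult<IEnumerable<TaskDto>> GetTasks()
    {
        // Implementation of your logic here; an empty list keeps the sample compilable.
        return Ok(new List<TaskDto>());
    }

    // A DTO named TaskDto avoids clashing with System.Threading.Tasks.Task.
    public class TaskDto
    {
        public int Id { get; set; }
        public string Title { get; set; } = string.Empty;
    }
}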
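The [ApiVersion] attribute says which versions a controller supports, but where the requested version is read from is configured at startup. A sketch assuming the Asp.Versioning.Mvc package (the successor to Microsoft.AspNetCore.Mvc.Versioning); the header name X-Api-Version is our own choice, not a library default.

```csharp
// Program.cs: a sketch assuming the Asp.Versioning.Mvc package is referenced.
using Asp.Versioning;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();
builder.Services
    .AddApiVersioning(options =>
    {
        options.DefaultApiVersion = new ApiVersion(1, 0);
        // Requests that omit the header are served as the default version (1.0).
        options.AssumeDefaultVersionWhenUnspecified = true;
        // Adds an api-supported-versions header to responses so clients can discover versions.
        options.ReportApiVersions = true;
        // Read the requested version from a request header instead of the URL.
        options.ApiVersionReader = new HeaderApiVersionReader("X-Api-Version");
    })
    .AddMvc(); // applies the versioning rules to MVC controllers

var app = builder.Build();
app.MapControllers();
app.Run();
```

A client then calls GET /api/tasks with the header X-Api-Version: 1.0. Because AssumeDefaultVersionWhenUnspecified is enabled, a request without the header is also served as version 1.0.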

Step 6. In Summation

Versioning lets our API evolve without breaking the clients that already depend on it. Whether the version number travels in the URL or in a header, current clients keep working while new clients adopt new features. URL-based versioning additionally keeps each version addressable as its own path, which fits RESTful principles and makes the API simple to explore and consume.

In the code example below, the API is versioned by URL: TasksController serves the api/v1 prefix and TasksControllerV2 serves the api/v2 prefix, each in its own controller class. The OpenApiDefinitions and OpenApiDefinitionsV2 classes hold the OpenAPI metadata (title, version, description, contact) for each version; they take effect once they are registered with the OpenAPI generator at startup. Finally, the custom ApiVersionAttribute shows how a routing convention can be packaged as a reusable attribute: it derives from RouteAttribute and builds the api/{version}/[controller] template, so any controller can adopt the convention with a single attribute instead of repeating the route string.

using System;
using Microsoft.AspNetCore.Mvc;
using Microsoft.OpenApi.Models;

namespace TaskManagementAPI.Controllers
{
    // Version 1
    [ApiController]
    [Route("api/v1/[controller]")] // resolves to /api/v1/Tasks
    public class TasksController : ControllerBase
    {
        // ...
    }

    // Version 2
    [ApiController]
    // Explicit path: [controller] would expand to "TasksControllerV2" for this class name.
    [Route("api/v2/tasks")]
    public class TasksControllerV2 : ControllerBase
    {
        // ...
    }

    // OpenAPI documentation for version 1
    public class OpenApiDefinitions
    {
        public OpenApiInfo Info { get; } = new OpenApiInfo
        {
            Title = "Peter Task Management API",
            Version = "v1",
            Description = "An API for managing tasks with version 1.",
            Contact = new OpenApiContact
            {
                Name = "Peter",
                Email = "peter@example.com" // placeholder address
            }
        };
    }

    // OpenAPI documentation for version 2
    public class OpenApiDefinitionsV2
    {
        public OpenApiInfo Info { get; } = new OpenApiInfo
        {
            Title = "Peter Task Management API",
            Version = "v2",
            Description = "An API for managing tasks with version 2.",
            Contact = new OpenApiContact
            {
                Name = "Peter",
                Email = "peter@example.com" // placeholder address
            }
        };
    }

    // Custom versioning attribute: a RouteAttribute that fills in the template
    // "api/{version}/[controller]", e.g. [ApiVersion("v1")] => api/v1/[controller].
    [AttributeUsage(AttributeTargets.Class, Inherited = false, AllowMultiple = false)]
    public class ApiVersionAttribute : RouteAttribute
    {
        public ApiVersionAttribute(string version) : base($"api/{version}/[controller]")
        {
        }
    }
}
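To use the custom attribute, a controller puts it where it would otherwise put [Route]. The sketch below uses a hypothetical ProjectsController in the same namespace as the attribute. One caution: the Asp.Versioning package also exposes an [ApiVersion] attribute, so in a project that references both, giving the custom attribute a distinct name (for example a hypothetical VersionedRouteAttribute) avoids confusion between the two.

```csharp
using System.Collections.Generic;
using Microsoft.AspNetCore.Mvc;

namespace TaskManagementAPI.Controllers
{
    // Hypothetical controller: the custom ApiVersionAttribute defined above supplies
    // the route template "api/v1/[controller]", so this resolves to /api/v1/Projects.
    [ApiController]
    [ApiVersion("v1")]
    public class ProjectsController : ControllerBase
    {
        [HttpGet]
        public ActionResult<IEnumerable<string>> GetProjects()
        {
            // Placeholder data for the sketch.
            return Ok(new[] { "Website redesign" });
        }
    }
}
```

Because the attribute derives from RouteAttribute, it also satisfies [ApiController]'s requirement that controllers use attribute routing.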